Let’s talk about the Ceph nodes a little, because it took me some time to better understand what is required to build a working Ceph cluster.

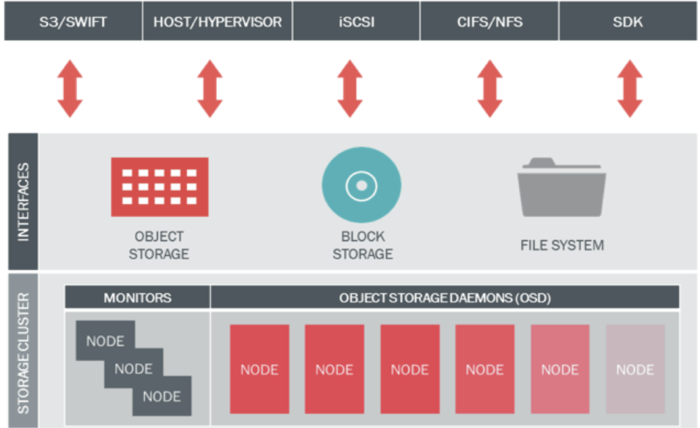

In summary, Ceph is built from the following components, which can run on separate nodes or all on one node. Running everything on one node is not recommended, because that node becomes a single point of failure.

1. Ceph OSD (Object Storage Daemons) store data as objects, manage data replication, recovery, and rebalancing, and provide state information to the Ceph Monitors. It’s recommended to use 1 OSD per physical disk.

2. Ceph MON (Monitors) maintain the overall health of the cluster by keeping the cluster map state, including the Monitor map, OSD map, Placement Group (PG) map, and CRUSH map. Monitors receive state information from other components to maintain these maps and distribute them to other Monitor and OSD nodes.

3. Ceph RGW (Object Gateway / RADOS Gateway) provides a RESTful API compatible with Amazon S3 and OpenStack Swift.

4. Ceph RBD (RADOS Block Device) provides block storage to VMs and bare metal as well as regular clients, and supports OpenStack and CloudStack. It includes enterprise features like snapshots, thin provisioning, and compression.

5. CephFS (File System) provides distributed, POSIX-compatible NAS storage.

Possible Ceph designs to start with:

1. I would recommend starting with 3 Monitor (MON) nodes and 3 OSD nodes. This design gives you a very flexible cluster with very good performance and redundancy. The downside is that you will need a lot of hardware for this setup. However, if you plan to run a large cluster, this is the best start.

2. You can have both Monitors and OSDs on the same node if you don’t have the hardware to start with. This way you can build a Ceph cluster on 3 nodes.

3. People who are really constrained by resources can install monitors as virtual machines and run 2 OSD nodes on physical servers. This is how I started. A lot of people who want to discover Ceph might not have the resources necessary to start with, so this model can solve the problem.

Monitor

A minimum of three monitor nodes is recommended, so that the cluster keeps its quorum (a majority of monitors) even if one monitor fails. Ceph monitor nodes are not resource hungry; they work well with fairly low CPU and memory. Run monitors on separate nodes rather than all on one node. If you want to run monitors on virtual machines, make sure each monitor runs on a different physical host to prevent a single point of failure. By the way, you have to start by building the monitors.
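To make the quorum arithmetic concrete, here is a minimal sketch in Python. It only assumes that monitors need a strict majority to form a quorum; the function names and the monitor-to-host mapping are made up for illustration and are not part of any Ceph tool.

```python
from collections import defaultdict


def quorum_size(monitors: int) -> int:
    """Smallest number of monitors that forms a strict majority."""
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can fail while a majority is still up."""
    return monitors - quorum_size(monitors)


def shared_hosts(placement: dict[str, str]) -> dict[str, list[str]]:
    """Return physical hosts that carry more than one monitor VM.

    `placement` maps a (hypothetical) monitor name to the physical
    host it runs on. An empty result means no host is shared.
    """
    by_host: dict[str, list[str]] = defaultdict(list)
    for monitor, host in placement.items():
        by_host[host].append(monitor)
    return {host: mons for host, mons in by_host.items() if len(mons) > 1}


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(n, "monitors: quorum", quorum_size(n),
              "tolerates", tolerated_failures(n), "failure(s)")
    # Hypothetical layout: two monitor VMs ended up on the same host.
    print(shared_hosts({"mon1": "hostA", "mon2": "hostA", "mon3": "hostB"}))
```

With these assumptions, three monitors tolerate one failure, and a fourth monitor does not add any extra tolerance, which is why odd monitor counts are the usual choice.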

Admin Node

Let’s not forget about the Admin Node. This can be a machine which you use just for Ceph node deployment. It does not need to run any Ceph components. In my case I run the Admin node on the first monitor node, but after using Ceph for some time, I have to admit that having a separate Admin node might not be a bad idea for large clusters.

I hope you enjoyed this part. Next time we will set up some nodes.